Scraping the Belgian Financial Services and Markets Authority’s insider trading data

Posted on Fri 18 October 2024 in Data engineering

While browsing around on the world wide web (as one does), I came across the data platform of the FSMA, the Belgian Financial Services and Markets Authority.

One of the interesting datasets you can find there is a table of manager’s transactions: insider trading.

Unfortunately, this data is not available through an API or as a download in a structured format. Fortunately, the website is nicely structured, which makes this an ideal project to dust off my scraping skills!

In the past, I’ve fetched HTML pages with requests and parsed them with BeautifulSoup. While reading the dltHub documentation (maybe the subject of another post), I found that Scrapy is one of the sources they support. An ideal project to test this library!

What do I want to do with the data?¶

I don’t have a specific goal in mind for this data, although it could be an interesting exercise to monitor how a stock moves a week, month or year after insiders buy or sell. An ambitious person might even build a trading bot on top of this dataset!

Scraping the FSMA data portal¶

Setup¶

To keep our project clean and reproducible, we create a virtual environment and a requirements file. After activating the virtual environment we can install our libraries.

```shell
mkdir fsma-scraper
cd fsma-scraper
python3 -m venv .venv
echo ".venv" > .gitignore
echo "scrapy" > requirements.txt
source .venv/bin/activate
pip install -r requirements.txt
```

Analysing the pages we want to scrape¶

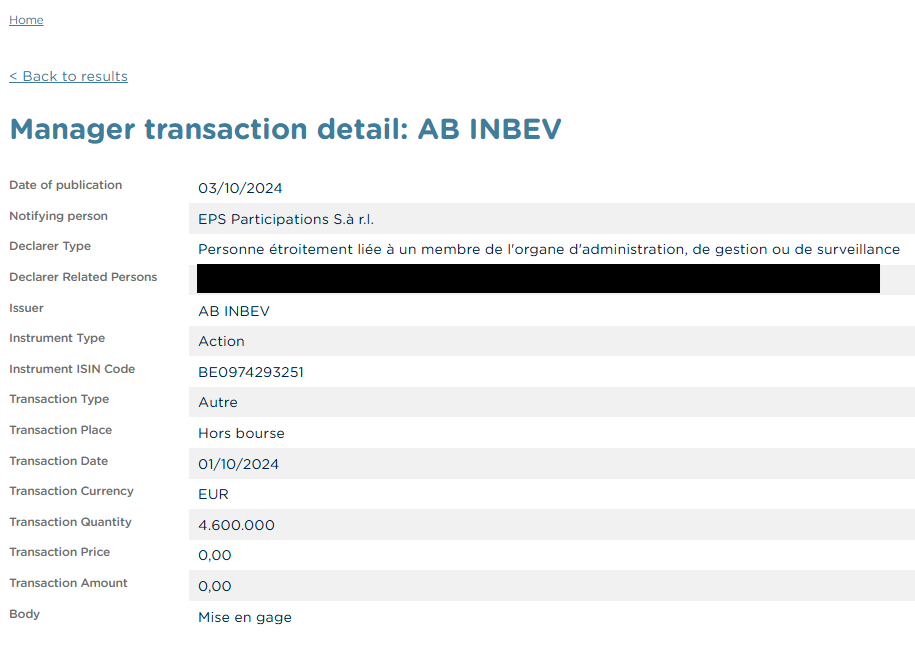

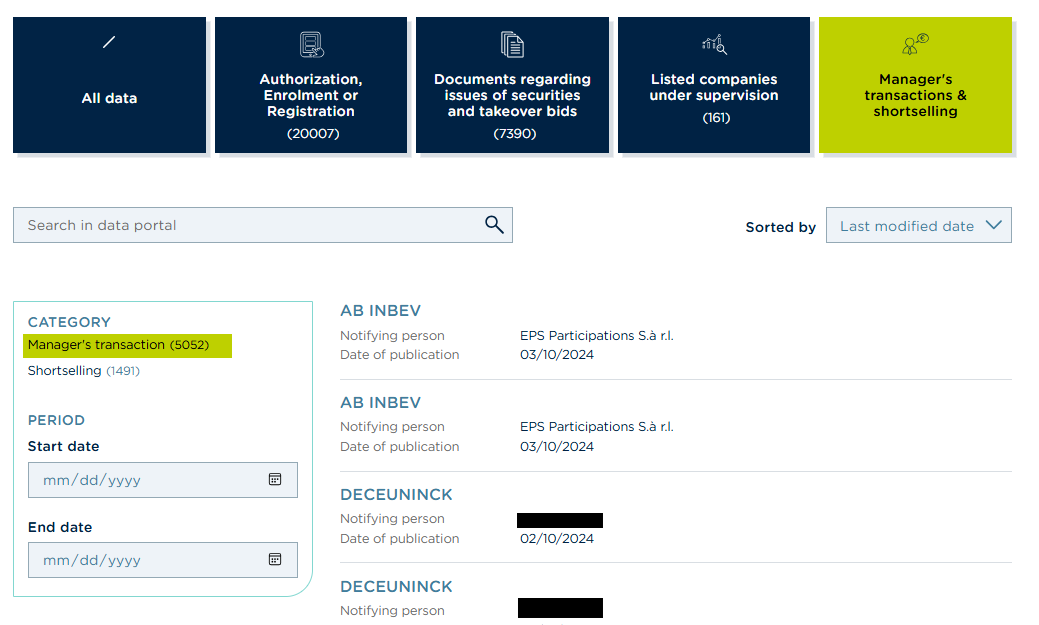

When opening the data portal URL, we can click on the button for Manager’s transactions & shortselling and filter on Manager’s transactions.

This shows a nice table with some basic information about the transactions. Clicking a title (e.g. AB InBev) opens a detail page about that transaction.

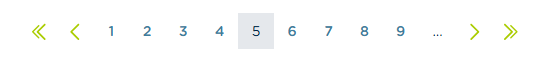

At the bottom of the list page, there is a nice pager to go to the next 10 transactions in the list. As you can see, at the time of writing there are about 5000 transactions (500 pages).
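If you would rather know all listing URLs up front, they can be derived from the page count. This is a minimal stdlib sketch, assuming the portal paginates through a zero-based page query parameter (an assumption to verify in the browser) and using the approximate numbers above; listing_page_urls is a hypothetical helper:

```python
import math
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Approximate numbers from the text: ~5000 transactions, 10 per listing page
TOTAL_TRANSACTIONS = 5000
PER_PAGE = 10

START_URL = (
    "https://www.fsma.be/en/data-portal?f%5B0%5D=fa_content_type%3Actmanagertransaction"
    "&f%5B1%5D=fa_content_type%3Actshortselling&f%5B2%5D=fa_mts_ct%3Actmanagertransaction"
)


def listing_page_urls(start_url: str, total: int, per_page: int) -> list[str]:
    """Build one URL per listing page, assuming a zero-based 'page' query parameter."""
    n_pages = math.ceil(total / per_page)
    scheme, netloc, path, query, fragment = urlsplit(start_url)
    params = parse_qsl(query)  # decoded facet filters f[0], f[1], f[2]
    urls = []
    for page in range(n_pages):
        # Drop any existing page parameter, then append the new one
        page_params = [(k, v) for k, v in params if k != "page"] + [("page", str(page))]
        # urlencode re-encodes the brackets and colons (f[0] -> f%5B0%5D)
        urls.append(urlunsplit((scheme, netloc, path, urlencode(page_params), fragment)))
    return urls


urls = listing_page_urls(START_URL, TOTAL_TRANSACTIONS, PER_PAGE)
print(len(urls))  # 500
```

Following the pager’s next link, as done later in this post, avoids hard-coding the page count, which keeps growing as new transactions are published.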

Of course, the first thing I did was open my browser’s devtools (F12) and check the network tab to see whether the website calls an underlying REST API. If it did, I could simply query that API directly, without scraping the site’s HTML pages, which takes a lot more resources and effort. Unfortunately (or on purpose?), there is no API to collect the insider transactions.

The plan¶

The plan is simple:

- Start from page 1

- Open every transaction in the list (10 detail pages)

- Scrape the data for all 10 of these pages

- Go to the next page

- Repeat (500 times)
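Before writing any Scrapy code, the plan can be expressed as a plain loop. The sketch below runs it against a tiny in-memory stand-in for the portal (FAKE_SITE, FAKE_DETAILS and their URLs are made up), so it only illustrates the control flow: scrape every detail page linked from a listing page, then move on to the next listing page until there is none.

```python
# Made-up, in-memory stand-in for the portal: each listing page links to
# detail pages and, optionally, to the next listing page.
FAKE_SITE = {
    "/list?page=0": {"details": ["/tx/1", "/tx/2"], "next": "/list?page=1"},
    "/list?page=1": {"details": ["/tx/3"], "next": None},
}
FAKE_DETAILS = {
    "/tx/1": {"issuer": "A"},
    "/tx/2": {"issuer": "B"},
    "/tx/3": {"issuer": "C"},
}


def crawl(start: str) -> list[dict]:
    """Follow the plan: scrape every detail page, then go to the next listing page."""
    items = []
    page = start
    while page is not None:                # repeat until there is no next page
        listing = FAKE_SITE[page]
        for link in listing["details"]:    # open every transaction in the list
            items.append({**FAKE_DETAILS[link], "url": link})
        page = listing["next"]             # go to the next page
    return items


items = crawl("/list?page=0")
print(items)
```

Scrapy replaces this sequential loop with callbacks: requests are scheduled and downloaded concurrently, so the order of the scraped items is not guaranteed.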

The code¶

Because Scrapy is also a CLI tool that runs spiders, all we have to do is define a spider that tells Scrapy how to behave:

```python
from datetime import datetime

import scrapy


class ManagerTransaction(scrapy.Spider):
    name = "ManagerTransaction"
    base_url = "https://www.fsma.be"
    start_urls = [
        f"{base_url}/en/data-portal?f%5B0%5D=fa_content_type%3Actmanagertransaction&f%5B1%5D=fa_content_type%3Actshortselling&f%5B2%5D=fa_mts_ct%3Actmanagertransaction"
    ]

    def parse(self, response):
        raise NotImplementedError
```

Our spider inherits from the default scrapy.Spider class. We give our spider a name (ManagerTransaction) and a list of start_urls, the pages where it should start scraping. Here that list holds a single URL, built from base_url: the first page of the transactions overview. When we call scrapy runspider myspider.py -o fsma.json, Scrapy sends a GET request to every URL in start_urls and calls parse() with the resulting response object (comparable to the result of a requests.get() call).

Now we implement the parse function!

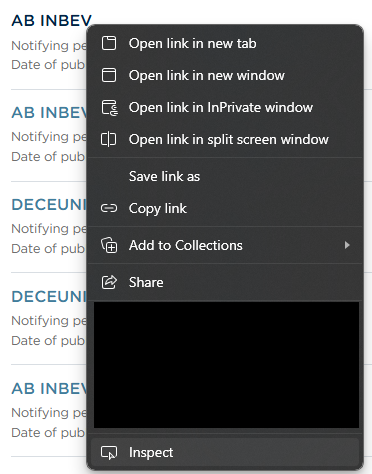

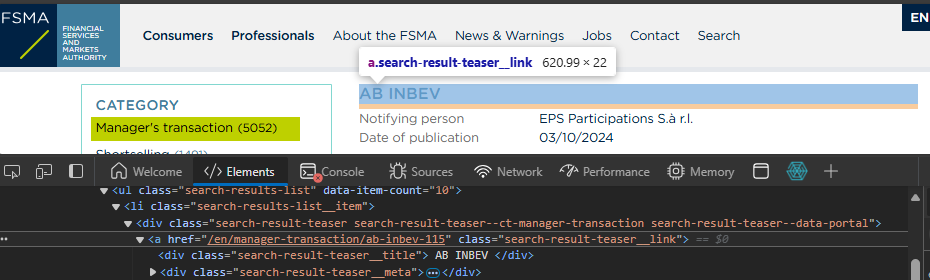

We want to get all links to the individual transaction pages, so we can tell Scrapy to scrape those in a later stage. Here comes the actual nitty-gritty work: writing the correct selectors to extract the right elements from the full HTML page.

We are lucky that the FSMA’s web pages are very nicely structured and that every element has a distinct CSS class (kudos to the developers!). This means that finding a unique selector for all <a href="/en/a/b"> anchors is not that difficult.

An easy way to find it is to right-click the link and inspect the element in the browser.

We can see that the anchor we are trying to ‘catch’ has a nice class named search-result-teaser__link. Scrolling down, we see that all transaction links have this same class. Jackpot!

An easy way to validate your selector is the Scrapy shell:

```
❯ scrapy shell "https://www.fsma.be/en/data-portal?f%5B0%5D=fa_content_type%3Actmanagertransaction&f%5B1%5D=fa_content_type%3Actshortselling&f%5B2%5D=fa_mts_ct%3Actmanagertransaction#data-portal-facets"
>>> response.css(".search-result-teaser__link")
[<Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/sipe...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/sipe...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/sipe...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/diet...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/bony...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/sipe...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/sipe...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/sipe...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/sipe...'>, <Selector query="descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' search-result-teaser__link ')]" data='<a href="/en/manager-transaction/bony...'>]
```

While the output is verbose, we can clearly see that 10 elements are returned, each having a data property that is the anchor we are looking for.
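The long query in that output is the XPath that Scrapy generated from our CSS class selector. The concat(' ', normalize-space(@class), ' ') construction pads the class attribute with spaces, so only the whole class name matches and not a substring of a longer class name. The stdlib sketch below mimics that logic in plain Python; both function names are ours, for illustration only:

```python
def xpath_style_class_match(class_attr: str, wanted: str) -> bool:
    """Mimic contains(concat(' ', normalize-space(@class), ' '), ' wanted ')."""
    normalized = " ".join(class_attr.split())  # like normalize-space(): trim and collapse whitespace
    return f" {wanted} " in f" {normalized} "


def naive_substring_match(class_attr: str, wanted: str) -> bool:
    """A naive contains(@class, 'wanted'), which also matches longer class names."""
    return wanted in class_attr


attr = "search-result-teaser__link-icon"  # a different, made-up class name
print(naive_substring_match(attr, "search-result-teaser__link"))    # True (false positive)
print(xpath_style_class_match(attr, "search-result-teaser__link"))  # False
print(xpath_style_class_match("  search-result-teaser__link\tactive ", "search-result-teaser__link"))  # True
```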

We can now tell Scrapy to scrape all of these links on the transactions page:

```python
from datetime import datetime

import scrapy


class ManagerTransaction(scrapy.Spider):
    name = "ManagerTransaction"
    base_url = "https://www.fsma.be"
    start_urls = [
        f"{base_url}/en/data-portal?f%5B0%5D=fa_content_type%3Actmanagertransaction&f%5B1%5D=fa_content_type%3Actshortselling&f%5B2%5D=fa_mts_ct%3Actmanagertransaction"
    ]

    def parse_node_details(self, response):
        raise NotImplementedError

    def parse(self, response):
        # Select the <a> elements that link to the individual transaction pages
        transaction_links = response.css(".search-result-teaser__link")
        # Follow each link and handle the detail page in parse_node_details
        yield from response.follow_all(transaction_links, self.parse_node_details)
```

We tell Scrapy to follow all 10 links and send each response to the parse_node_details function. This function extracts all fields from the detail page and yields them as a single transaction.

Same story: we fire up the browser, right-click, inspect and look for a unique class for every block of text we want to extract. Luckily, we find nice CSS classes to use again! I also add date_of_extraction and url for debugging purposes, and we have everything we need for this transaction.

Note that I do something fun with transaction_date: the HTML actually contains a full timestamp in the datetime attribute of a time element, instead of only the date shown on the page. I don’t know if it’s super useful, but I might as well scrape it since I have the page open anyway.

```python
def parse_node_details(self, response):
    # Container of a single manager's transaction on the detail page
    details = response.css(".node--type-ct-manager-transaction")

    yield {
        "date_of_publication": details.css(".field--name-field-ct-date-time").css(".field__item::text").get(),
        "notifying_person": details.css(".field--name-field-ct-declarer-name").css(".field__item::text").get(),
        "declarer_type": details.css(".field--name-field-ct-declarer-type").css(".field__item::text").get(),
        "declarer_related_persons": details.css(".field--name-field-ct-description").css(".field__item::text").get(),
        "issuer": details.css(".field--name-field-ct-issuer").css(".field__item::text").get(),
        "instrument_type": details.css(".field--name-field-ct-instrument-type").css(".field__item::text").get(),
        "instrument_isin_code": details.css(".field--name-field-ct-instrument-isin-code").css(".field__item::text").get(),
        "transaction_type": details.css(".field--name-field-ct-transaction-type").css(".field__item::text").get(),
        "transaction_place": details.css(".field--name-field-ct-transaction-place").css(".field__item::text").get(),
        # Full timestamp from the datetime attribute of the <time> element
        "transaction_date": details.css(".field--name-field-ct-transaction-date").xpath(".//div[2]/time/@datetime").get(),
        "transaction_currency": details.css(".field--name-field-ct-transaction-currency").css(".field__item::text").get(),
        "transaction_quantity": details.css(".field--name-field-ct-transaction-quantity").css(".field__item::text").get(),
        "transaction_price": details.css(".field--name-field-ct-price").css(".field__item::text").get(),
        "transaction_amount": details.css(".field--name-field-ct-amount").css(".field__item::text").get(),
        "body": details.css(".field--name-field-ct-body").css(".field__item").xpath(".//p/text()").get(),
        # Debugging helpers
        "date_of_extraction": str(datetime.now()),
        "url": response.url,
    }
```
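The transaction_date value is stored as a string. Since it is a machine-readable timestamp, it can be turned into a real datetime during post-processing. This is a minimal sketch, assuming the attribute holds an ISO 8601 string such as 2024-10-15T08:30:00Z (a made-up example value; check what the page actually contains), and assuming UTC when no offset is present:

```python
from datetime import datetime, timezone
from typing import Optional


def parse_iso_timestamp(value: Optional[str]) -> Optional[datetime]:
    """Parse an ISO 8601 string like '2024-10-15T08:30:00Z' into an aware datetime."""
    if not value:
        return None  # the field may be missing on some pages
    # datetime.fromisoformat() only accepts a trailing 'Z' from Python 3.11 on
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    parsed = datetime.fromisoformat(value)
    # Assumption: timestamps without an offset are treated as UTC
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


ts = parse_iso_timestamp("2024-10-15T08:30:00Z")
print(ts.isoformat())  # 2024-10-15T08:30:00+00:00
```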

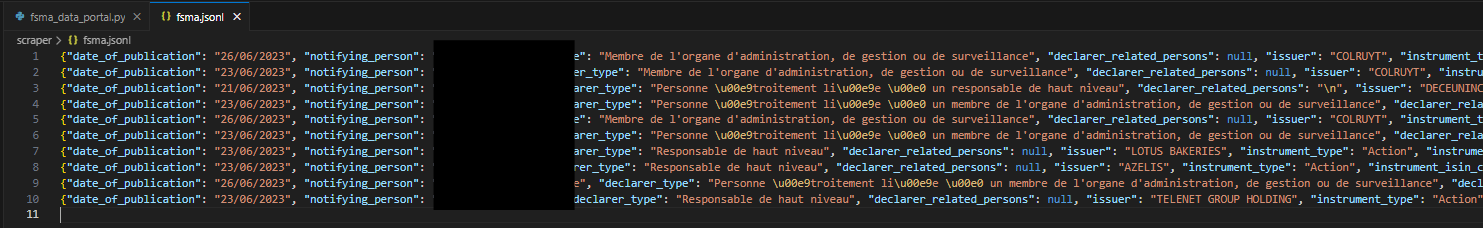

Running the following command will now scrape all details from the first 10 transactions and write them to a jsonlines file, fsma.jsonl.

```shell
scrapy runspider fsma_data_portal.py -o fsma.jsonl
```

Opening the file indeed shows us those!
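JSON Lines stores one JSON object per line, so the output can be loaded with the standard library alone. This is a minimal sketch; load_jsonl is our own helper, and the demo writes a made-up two-line file to a temporary directory instead of reading the real fsma.jsonl:

```python
import json
import tempfile
from pathlib import Path


def load_jsonl(path):
    """Read a JSON Lines file: one JSON object per non-empty line."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Made-up records standing in for the scraped output
records = [
    {"issuer": "Example NV", "transaction_type": "Acquisition"},
    {"issuer": "Other SA", "transaction_type": "Disposal"},
]

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "fsma.jsonl"
    path.write_text("".join(json.dumps(r) + "\n" for r in records), encoding="utf-8")
    loaded = load_jsonl(path)

print(len(loaded), loaded[0]["issuer"])  # 2 Example NV
```

With pandas, pandas.read_json("fsma.jsonl", lines=True) gives a DataFrame directly. Keep in mind that -o appends to an existing output file while -O overwrites it, so running the spider twice with -o duplicates the records.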

Now we’re almost there. We want to run this for all 500+ pages, ideally without providing the URLs of all 500 pages ourselves. We’ve seen that there is a nice paging bar at the bottom of the transactions page, and it includes a next button.

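Devtools shows that the next button sits in an element with a nice class, pager__item--next. No special paging logic is needed: response.follow() creates a request for the link, and passing self.parse as its callback makes Scrapy parse the next page exactly like the current one. Adding these 2 lines to parse() is enough to continue on the next page: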

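```python
# Inside parse(): follow the "next" link of the pager and parse that page the same way
for next_page in response.css(".pager__item--next").xpath(".//a"):
    yield response.follow(next_page, self.parse)
```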
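response.follow() takes the href of the anchor and resolves it against the URL of the current page, much like urllib.parse.urljoin. Pager links are often relative query strings; the stdlib sketch below assumes a hypothetical, shortened href of that shape (check the real one in devtools) and shows that the path and facet filter survive while only the page number changes:

```python
from urllib.parse import parse_qs, urljoin, urlsplit

# Shortened example URLs; the "next" href is hypothetical
current_page = "https://www.fsma.be/en/data-portal?f%5B2%5D=fa_mts_ct%3Actmanagertransaction&page=0"
next_href = "?f%5B2%5D=fa_mts_ct%3Actmanagertransaction&page=1"

# A query-only relative link keeps the base path and replaces the query
next_url = urljoin(current_page, next_href)
print(next_url)
# https://www.fsma.be/en/data-portal?f%5B2%5D=fa_mts_ct%3Actmanagertransaction&page=1

query = parse_qs(urlsplit(next_url).query)
print(query["page"])  # ['1']
```

On the last page there is no next link, so the loop yields nothing and the crawl ends once all scheduled requests are done.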

Putting everything together¶

```python
from datetime import datetime

import scrapy


class ManagerTransaction(scrapy.Spider):
    name = "ManagerTransaction"
    base_url = "https://www.fsma.be"
    start_urls = [
        f"{base_url}/en/data-portal?f%5B0%5D=fa_content_type%3Actmanagertransaction&f%5B1%5D=fa_content_type%3Actshortselling&f%5B2%5D=fa_mts_ct%3Actmanagertransaction"
    ]

    def parse_node_details(self, response):
        # Container of a single manager's transaction on the detail page
        details = response.css(".node--type-ct-manager-transaction")

        yield {
            "date_of_publication": details.css(".field--name-field-ct-date-time").css(".field__item::text").get(),
            "notifying_person": details.css(".field--name-field-ct-declarer-name").css(".field__item::text").get(),
            "declarer_type": details.css(".field--name-field-ct-declarer-type").css(".field__item::text").get(),
            "declarer_related_persons": details.css(".field--name-field-ct-description").css(".field__item::text").get(),
            "issuer": details.css(".field--name-field-ct-issuer").css(".field__item::text").get(),
            "instrument_type": details.css(".field--name-field-ct-instrument-type").css(".field__item::text").get(),
            "instrument_isin_code": details.css(".field--name-field-ct-instrument-isin-code").css(".field__item::text").get(),
            "transaction_type": details.css(".field--name-field-ct-transaction-type").css(".field__item::text").get(),
            "transaction_place": details.css(".field--name-field-ct-transaction-place").css(".field__item::text").get(),
            # Full timestamp from the datetime attribute of the <time> element
            "transaction_date": details.css(".field--name-field-ct-transaction-date").xpath(".//div[2]/time/@datetime").get(),
            "transaction_currency": details.css(".field--name-field-ct-transaction-currency").css(".field__item::text").get(),
            "transaction_quantity": details.css(".field--name-field-ct-transaction-quantity").css(".field__item::text").get(),
            "transaction_price": details.css(".field--name-field-ct-price").css(".field__item::text").get(),
            "transaction_amount": details.css(".field--name-field-ct-amount").css(".field__item::text").get(),
            "body": details.css(".field--name-field-ct-body").css(".field__item").xpath(".//p/text()").get(),
            # Debugging helpers
            "date_of_extraction": str(datetime.now()),
            "url": response.url,
        }

    def parse(self, response):
        # Follow all transaction links on the current listing page
        transaction_links = response.css(".search-result-teaser__link")
        yield from response.follow_all(transaction_links, self.parse_node_details)

        # Continue with the next listing page, if there is one
        for next_page in response.css(".pager__item--next").xpath(".//a"):
            yield response.follow(next_page, self.parse)
```

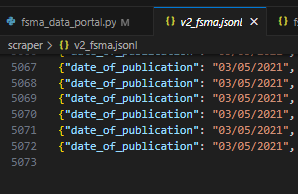

Running the spider now takes a bit longer, but thanks to Scrapy’s asynchronous, concurrent handling of requests, not that long:

```shell
scrapy runspider fsma_data_portal.py -o fsma.jsonl
```

Checking our output file, we can now see more than 5000 extracted transactions, going back to May 2021, when the FSMA first started publishing this list.

This dataset just calls for some analysis, right?

Conclusion¶

My main surprise was how fast Scrapy is, and how elegant the code looks in the end (a single class of only 40 lines)!

I remember sending requests manually, parsing the HTML with bs4 or some other XML parsing tool, and trying to keep everything performant with multithreading. Scrapy does these things out of the box and is a very nice tool to have in your data engineering toolbelt (certainly as part of your ELT processes, e.g. with dltHub?)!

The code of this spider is also available on GitHub.

Note¶

I couldn’t find anything prohibiting scraping in the FSMA’s robots.txt, and the website states that reproducing its information is allowed, but not for commercial use.

Even so, be a gentle(wo)man and don’t hammer the website with scraping requests. If you want to run this script periodically, only scrape the new data instead of refreshing your whole dataset. That saves both you and the FSMA some processing power.
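One way to scrape only the new data is to remember which detail URLs are already in your output and skip them. This is a minimal stdlib sketch of that bookkeeping, assuming the url field written by the spider identifies a transaction and that the listing is ordered newest first (both assumptions); seen_urls and new_links are hypothetical helpers, and the demo uses a made-up one-line output file:

```python
import json
import tempfile
from pathlib import Path


def seen_urls(path):
    """Collect the 'url' field of every record in an existing JSON Lines file."""
    path = Path(path)
    if not path.exists():
        return set()  # first run: nothing scraped yet
    with path.open(encoding="utf-8") as f:
        return {json.loads(line)["url"] for line in f if line.strip()}


def new_links(links, seen):
    """Keep only the detail URLs that are not in the previous output."""
    return [link for link in links if link not in seen]


with tempfile.TemporaryDirectory() as tmp:
    output = Path(tmp) / "fsma.jsonl"
    output.write_text(
        json.dumps({"url": "https://www.fsma.be/en/manager-transaction/old"}) + "\n",
        encoding="utf-8",
    )
    seen = seen_urls(output)

listing = [
    "https://www.fsma.be/en/manager-transaction/new",
    "https://www.fsma.be/en/manager-transaction/old",
]
todo = new_links(listing, seen)
print(todo)  # ['https://www.fsma.be/en/manager-transaction/new']
# An empty list would mean this listing page holds nothing new, so paging could stop
```

In the spider, parse() could apply such a filter to the absolute link URLs and skip following the next page once a listing page yields no new links.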

While the data is public, I’ve chosen to mask actual names from the screenshots.