Analyzing the Belgian Crossroadsbank for Enterprises open data with dbt and DuckDB part 1 — loading the data

Posted on Sat 12 October 2024 in Data engineering

While looking for interesting datasets to play around with DuckDB, I came across the ‘open’ data of the Belgian Crossroadsbank for Enterprises (abbrivated in Dutch to KBO). The dataset is free to download, but you need to register beforehand.

You can download either the full dataset, or the incremental dataset every month. The full dataset is a 240MB zip file containing 10 csv files.

My goal is to use dbt to load this data in DuckDB (dbt is the E in L, right?), do some interesting analysis on top of it, and maybe expose the data as a Streamlit application.

All code in this article can be found on Github. The data can be downloaded from the offical source.

Exploring the data¶

Let’s quickly explore the downloaded zip file. Using unzip , we can list the files inside:

> unzip -l KboOpenData_0128_2024_10_Full.zip

Archive: KboOpenData_0128_2024_10_Full.zip

Length Date Time Name

--------- ---------- ----- ----

88155847 2024-10-06 08:51 enterprise.csv

68820875 2024-10-06 08:51 establishment.csv

150209614 2024-10-06 08:51 denomination.csv

292002916 2024-10-06 08:52 address.csv

31857265 2024-10-06 08:52 contact.csv

912198724 2024-10-06 08:52 activity.csv

308904 2024-10-06 08:52 branch.csv

149 2024-10-06 08:52 meta.csv

1356938 2024-10-06 08:52 code.csv

--------- -------

1544911232 9 files

The definition of these csv’s is quite well defined in the cookbook supplied by the FPS Economy (FOD Economie), although in a pdf and not in a structured format.

We can also check the number of records in each file:

❯ unzip -l KboOpenData_0128_2024_10_Full.zip

❯ ls *.csv | xargs -I X wc -l X | sed 's|\\s|\\t\\t|g'

20488460 activity.csv

2774975 address.csv

7355 branch.csv

14949 code.csv

652301 contact.csv

3236485 denomination.csv

1904225 enterprise.csv

1638592 establishment.csv

6 meta.csv

Getting started¶

Setting up the dbt project¶

First, we set up our working directory. We create a python virtual environment in it, setup our requirements.txt file, and install our python dependencies:

mkdir be_kbo_analysis

cd be_kbo_analysis

python3 -m venv .venv

echo ".venv" >> .gitignore

echo "dbt-core" >> requirements.txt

echo "dbt-duckdb" >> requirements.txt

# Activate the venv

source .venv/bin/activate

pip install -r requirements.txt

Use dbt init kbo to create a dbt project called kbo and select duckdb as database. The default values in the newly created profiles.yaml are fine (DuckDB will write to a databasefile named dev.duckdb).

Let’s start by editing our dbt_project.yml file.

cd kbo

mkdir models/001_staging

code dbt_project.yml

We add a variable kbo_files_root_path, pointing to our unzipped folder, in my case the folder be_kbo_analysis in my home directory. I’m also going to make a 001_staging folder inside models that will contain the definitions of the models, directly imported from csv. To make things more performant, I will materialize the models inside that folder as table.

I prefer numbering my folders to keep some structure, but they don’t affect the dbt project at all. For big dbt projects, try grouping models based on common code owners, models that run at the same time or models that belong in the same target schema. The organisation of big dbt projects deserves a separate post.

dbt_project.yml now looks like this:

name: 'kbo'

version: '1.0.0'

profile: 'kbo'

model-paths: ["models"]

analysis-paths: ["analyses"]

test-paths: ["tests"]

seed-paths: ["seeds"]

macro-paths: ["macros"]

snapshot-paths: ["snapshots"]

clean-targets:

- "target"

- "dbt_packages"

vars:

kbo_files_root_path: "{{ env_var('HOME') }}/be_kbo_analysis"

models:

kbo:

staging:

+materialized: table

Loading the csv files in DuckDB¶

As dbt ‘only’ sends rendered sql to the database engine, and duckdb is very capable in reading from csv files, we should be able to just create a model per csv file, and select * from it.

Let’s first scaffold the sql files:

ls ../*.csv | xargs -I X basename X .csv | xargs -I X touch models/001_staging/X.sql

For every csv file, this creates an empty file which we will fill up with:

# models/001_staging/activity.sql

select * from read_csv('{{ var("kbo_files_root_path") }}/activity.csv')

# models/001_staging/enterprise.sql

select * from read_csv('{{ var("kbo_files_root_path") }}/enterprise.csv')

# etc etc

Now let’s try running dbt! Make sure you are in the dbt project folder (in our case, kbo ), and execute dbt run .

❯ dbt run

13:36:46 Running with dbt=1.8.7

13:36:47 Registered adapter: duckdb=1.9.0

13:36:48 Found 9 models, 416 macros

13:36:48

13:36:48 Concurrency: 1 threads (target='dev')

13:36:48

13:36:48 1 of 9 START sql table model main.activity ..................................... [RUN]

13:38:03 1 of 9 OK created sql table model main.activity ................................ [OK in 74.73s]

13:38:03 2 of 9 START sql table model main.address ...................................... [RUN]

13:38:31 2 of 9 OK created sql table model main.address ................................. [OK in 28.38s]

13:38:32 3 of 9 START sql table model main.branch ....................................... [RUN]

13:38:32 3 of 9 OK created sql table model main.branch .................................. [OK in 0.65s]

13:38:32 4 of 9 START sql table model main.code ......................................... [RUN]

13:38:33 4 of 9 OK created sql table model main.code .................................... [OK in 0.74s]

13:38:33 5 of 9 START sql table model main.contact ...................................... [RUN]

13:38:36 5 of 9 OK created sql table model main.contact ................................. [OK in 3.55s]

13:38:37 6 of 9 START sql table model main.denomination ................................. [RUN]

13:38:50 6 of 9 OK created sql table model main.denomination ............................ [OK in 13.88s]

13:38:50 7 of 9 START sql table model main.enterprise ................................... [RUN]

13:38:58 7 of 9 OK created sql table model main.enterprise .............................. [OK in 7.56s]

13:38:58 8 of 9 START sql table model main.establishment ................................ [RUN]

13:39:01 8 of 9 OK created sql table model main.establishment ........................... [OK in 2.79s]

13:39:01 9 of 9 START sql table model main.meta ......................................... [RUN]

13:39:02 9 of 9 OK created sql table model main.meta .................................... [OK in 0.70s]

13:39:02

13:39:02 Finished running 9 table models in 0 hours 2 minutes and 13.94 seconds (133.94s).

13:39:02

13:39:02 Completed successfully

13:39:02

13:39:02 Done. PASS=9 WARN=0 ERROR=0 SKIP=0 TOTAL=9

Hooray! We’ve just created 9 tables inside the dev.duckdb database. As you might notice, my PC where I’m running this on, is very slow. However, once these tables are loaded, I’m hoping to use the power of DuckDB to make my analysis fast!

A fun tidbit is to check the file size of the DuckDB database, which is bigger than the original zip file (240MB vs 370MB):

❯ ls -lah dev.duckdb

-rw-r--r-- 1 wout wout 371M Oct 12 15:39 dev.duckdb

Documenting our staging tables¶

Every of our 9 models, should be nicely documented and tested. Because the data now exists in our DuckDB database, we quickly scaffold the yamls for our models using dbt-osmosis .

First, add a config to our dbt_project.yml :

models:

kbo:

+dbt-osmosis: "_{model}.yml"

Second, install the tool and let it do it’s magic:

pip install dbt-osmosis

dbt-osmosis yaml refactor

This generates 1 yml file per model, which we can use to describe the model. We can, for example, add descriptions (coming from the cookbook).

We can also add some tests!

According to the cookbook, the NaceVersion is always either 2003 or 2008. The same source tells us that the field NaceCode should be found in the code table. We can add these validations as tests.

An example in models/001_staging/_activity.yml:

version: 2

models:

- name: activity

description: "Bevat 1 lijn per activiteit van een entiteit of vestigingseenheid. Een entiteit of vestigingseenheid kan meerdere activiteiten uitoefenen"

columns:

- name: EntityNumber

description: 'Het vestigingseenheids- of ondernemingsnummer.'

data_type: VARCHAR

- name: ActivityGroup

description: 'Soort activiteit. Zie codetabel'

data_type: VARCHAR

- name: NaceVersion

description: 'Geeft aan of de activiteit is gecodeerd in Nace versie 2003 of Nace versie 2008.'

data_type: BIGINT

tests:

- accepted_values:

values: ['2003', '2008']

- name: NaceCode

description: 'De activiteit van de entiteit of vestigingseenheid, gecodeerd in een Nace code (in de aangegeven versie). Zie codetabel (Nace2003, Nace2008).'

data_type: VARCHAR

tests:

- relationships:

to: ref('code')

field: Code

- name: Classification

description: 'Geeft aan of dit een hoofd-, neven- of hulpactiviteit is. Zie codetabel.'

data_type: VARCHARCreating dbt docs

To run these 2 tests, we can execute dbt test:

❯ dbt test

14:18:36 Running with dbt=1.8.7

14:18:37 Registered adapter: duckdb=1.9.0

14:18:37 Found 9 models, 2 data tests, 416 macros

14:18:37

14:18:38 Concurrency: 1 threads (target='dev')

14:18:38

14:18:38 1 of 2 START test accepted_values_activity_NaceVersion__2003__2008 ............. [RUN]

14:18:38 1 of 2 PASS accepted_values_activity_NaceVersion__2003__2008 ................... [PASS in 0.36s]

14:18:38 2 of 2 START test relationships_activity_NaceCode__Code__ref_code_ ............. [RUN]

14:18:39 2 of 2 PASS relationships_activity_NaceCode__Code__ref_code_ ................... [PASS in 1.22s]

14:18:40

14:18:40 Finished running 2 data tests in 0 hours 0 minutes and 2.17 seconds (2.17s).

14:18:40

14:18:40 Completed successfully

14:18:40

14:18:40 Done. PASS=2 WARN=0 ERROR=0 SKIP=0 TOTAL=2

Looks great! Always run your tests before building on your data, so you know that your incoming data is clean and ready to use.

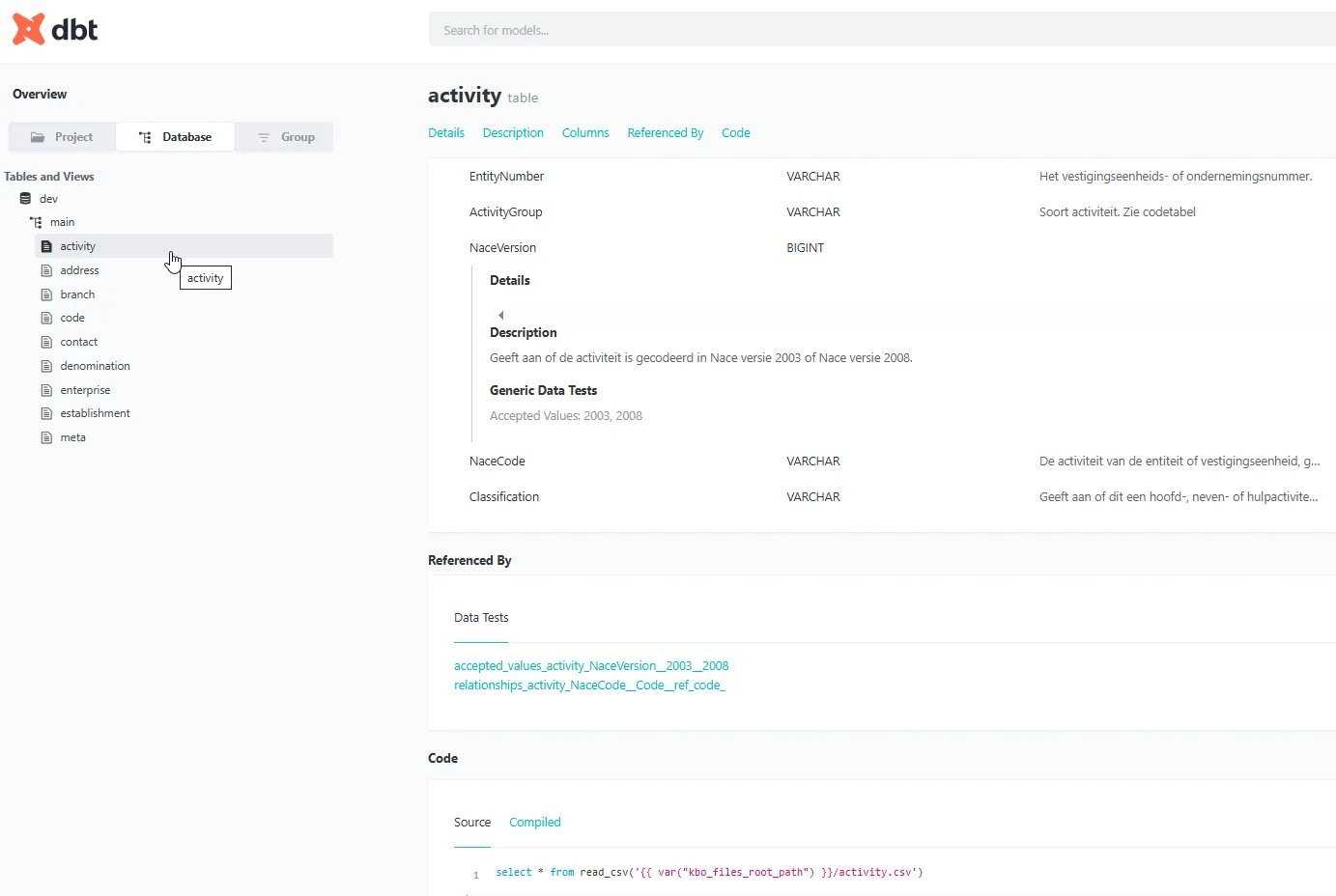

Now that we have the beginning of a dbt project, we can take a look at the dbt documentation.

dbt docs generate

dbt docs serve

Which opens a local webserver at localhost:8080

A screenshot of the generated dbt docs site, including table and column descriptions and tests.

Voila, now the data is ready for analysis, either further via dbt, or by connecting your favorite BI tool to the DuckDB database.